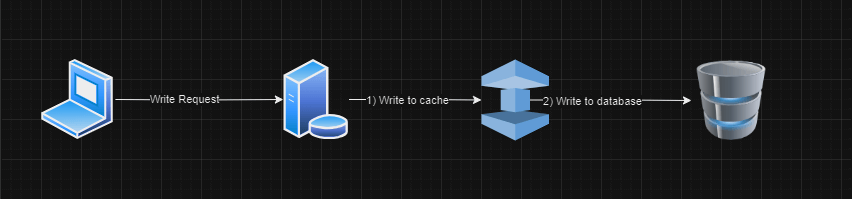

We saw earlier that our weather forecast app is growing more popular day by day. It is a read-heavy app: people check weather updates frequently. It is now time to address latency and throughput so that users have a better experience.

In this scenario, we will implement distributed caching so that the app performs well and can be scaled further as requirements grow.

Caching is the idea of storing frequently accessed data in a fast, accessible layer so that many client requests can be served faster. Large-scale systems require a large cluster of cache servers, so the idea is to apply well-known distributed-systems concepts to build a highly available and reliable distributed cache layer.

Caching Concepts:

Frequently accessed data can be cached and retrieved faster, but we cannot keep all data in the cache forever. Cached data needs a well-configured eviction policy and Time to Live (TTL). TTL is the amount of time after which a piece of cached data is evicted from the cache server; it is preconfigured on the cache server and handled automatically on the server side.

At some point, cached entries need to be deleted or updated, and this is where eviction policies come in. Choosing the right eviction policy is critical for the performance of the caching system.

- Least recently used (LRU): is a popular caching algorithm used to manage and evict items from a cache when it reaches capacity. The idea behind LRU is to keep the most recently used items in the cache and evict the least recently used ones. An LRU cache is commonly implemented with a doubly linked list and a hash map. The doubly linked list maintains the order of items based on their access time, while the hash map provides efficient lookup and retrieval of items. For example: your Instagram photos. Recently posted photos are more likely to be accessed by your followers.

- Least frequently used (LFU): is another caching algorithm used to manage and evict items from a cache. Unlike the LRU algorithm, which prioritizes based on recency of use, the LFU algorithm focuses on how often each item is used. In LFU, items that are accessed less frequently are evicted first. Implementing LFU caching requires a data structure that tracks a usage counter for each item, typically a hash map combined with a priority queue or heap, so that the least frequently used item can be found efficiently. For example: a search suggestion cache, which stores the most frequently used words and removes the least used ones.

- Most recently used (MRU): is a caching algorithm that evicts the most recently accessed items first. Unlike Least Recently Used (LRU), which evicts the items that have gone unused the longest, MRU assumes that an item that was just accessed is the least likely to be needed again soon, so older items remain in the cache. An MRU cache can be implemented with the same data structures as LRU: a doubly linked list that maintains the order of items based on their access time, and a hash map that provides efficient lookup and retrieval of items. For example: Facebook friend suggestions. If you remove someone from your suggestions so that they no longer appear, the system should delete this most recently touched item from the cache.
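To make the linked list plus hash map idea concrete, here is a minimal LRU cache sketch. The class name `LruCache` and its members are hypothetical and are not part of the weather forecast app or any library; the sketch is single-threaded and only meant to illustrate the eviction order.

```csharp
#nullable enable
using System;
using System.Collections.Generic;
using System.Diagnostics.CodeAnalysis;

// Illustrative LRU cache (hypothetical type, not thread-safe).
// The dictionary gives O(1) lookup; the linked list keeps entries ordered
// from most recently used (front) to least recently used (back).
public sealed class LruCache<TKey, TValue> where TKey : notnull
{
    private readonly int _capacity;
    private readonly Dictionary<TKey, LinkedListNode<KeyValuePair<TKey, TValue>>> _map = new();
    private readonly LinkedList<KeyValuePair<TKey, TValue>> _order = new();

    public LruCache(int capacity)
    {
        if (capacity <= 0) throw new ArgumentOutOfRangeException(nameof(capacity));
        _capacity = capacity;
    }

    public int Count => _map.Count;

    public bool TryGet(TKey key, [MaybeNullWhen(false)] out TValue value)
    {
        if (_map.TryGetValue(key, out var node))
        {
            // A read counts as a use: move the entry to the front.
            _order.Remove(node);
            _order.AddFirst(node);
            value = node.Value.Value;
            return true;
        }

        value = default;
        return false;
    }

    public void Set(TKey key, TValue value)
    {
        if (_map.TryGetValue(key, out var existing))
        {
            // Overwrite: drop the old node, it is re-added at the front below.
            _order.Remove(existing);
            _map.Remove(key);
        }
        else if (_map.Count >= _capacity)
        {
            // Cache is full: evict the entry at the back (least recently used).
            var lru = _order.Last!;
            _order.RemoveLast();
            _map.Remove(lru.Value.Key);
        }

        var node = new LinkedListNode<KeyValuePair<TKey, TValue>>(
            new KeyValuePair<TKey, TValue>(key, value));
        _order.AddFirst(node);
        _map[key] = node;
    }
}
```

With a capacity of two, setting `a` and `b`, reading `a`, and then setting `c` evicts `b`, because `a` was used more recently. Turning this into an MRU cache would mostly mean evicting from the front of the list instead of the back.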

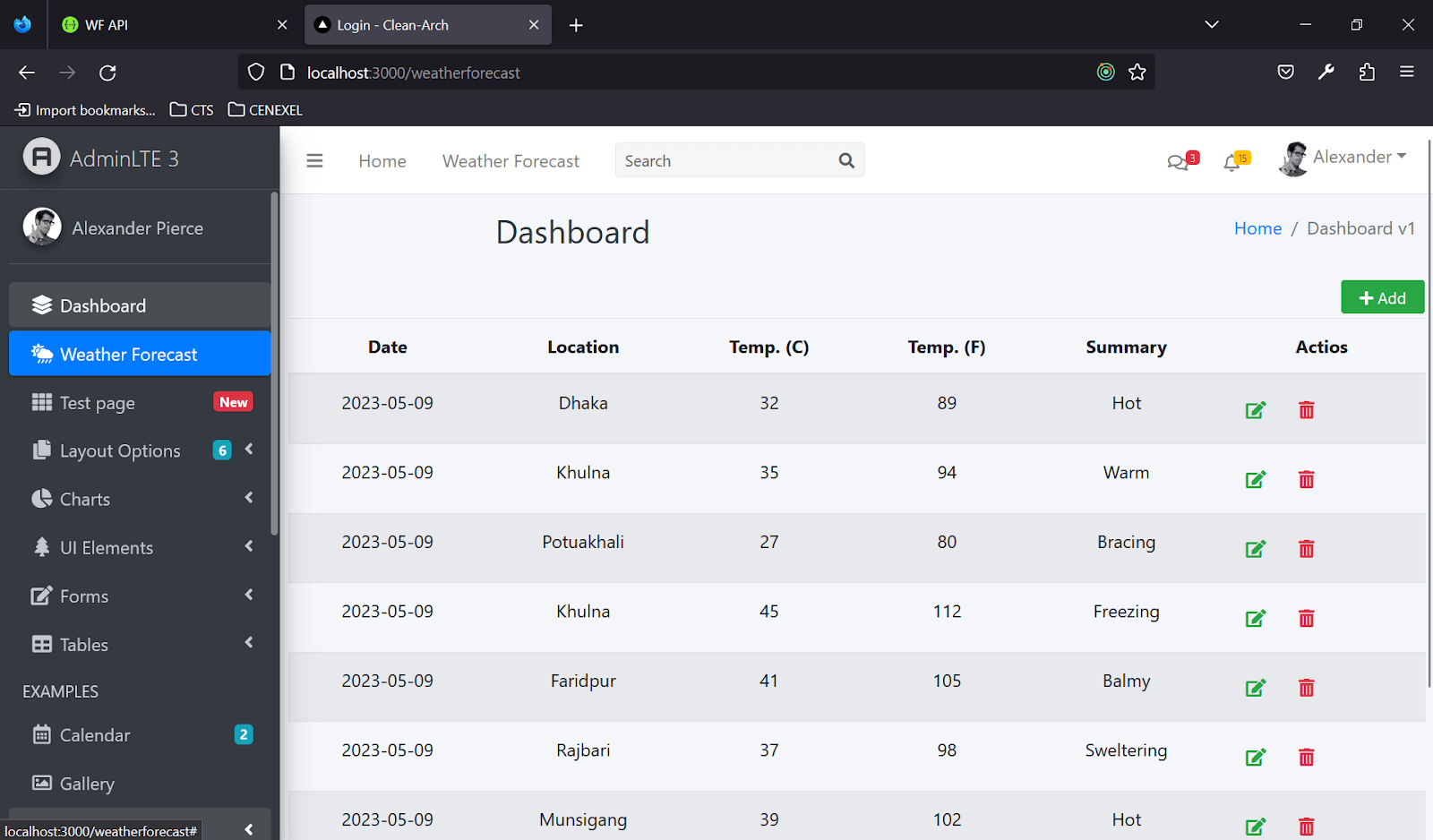

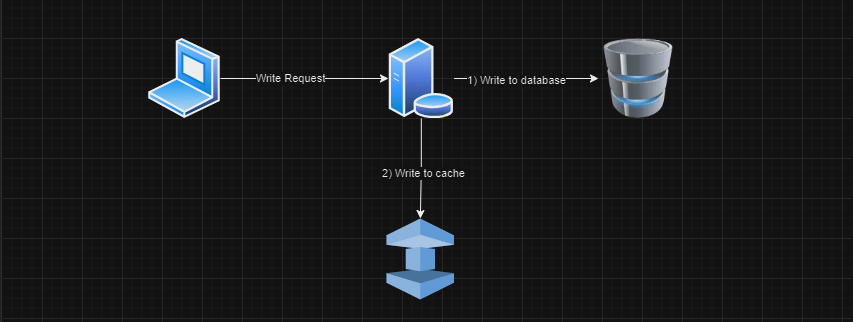

Caching Write Policies:

- Write-through cache: In this policy, when a write request is received, data is written to both the cache and the database. This can happen either in parallel or one after the other.

- Write-back cache: In this policy, data is written to the cache first and then asynchronously persisted to the database. Systems that do not require the latest data to be available in the source of truth can potentially benefit from this caching strategy.

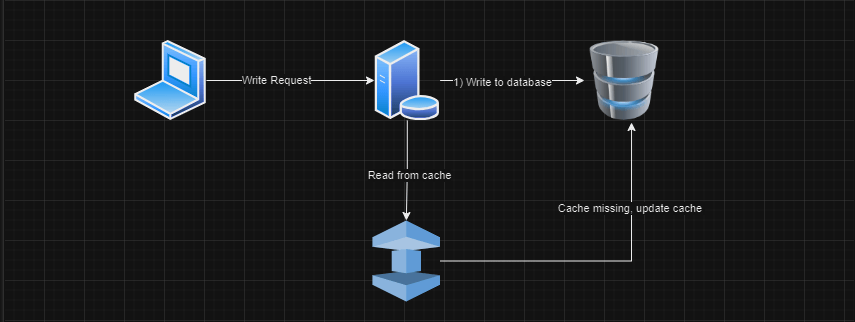

- Write-around cache: In this policy, new data is written only to the database, not to the cache. When a cache miss occurs on a later read, the cache is updated with the required data.
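The three write policies above can be compared side by side in a small sketch. Everything here is an assumption made for illustration: the class `WritePolicyDemo` is hypothetical, and two in-memory dictionaries stand in for the real cache and database. Removing a stale cache entry in `WriteAround` is also an assumption, added so that the next read repopulates the cache.

```csharp
#nullable enable
using System;
using System.Collections.Generic;
using System.Diagnostics.CodeAnalysis;

// Hypothetical demo type: dictionaries stand in for the cache and the database.
public sealed class WritePolicyDemo
{
    public Dictionary<string, string> Cache { get; } = new();
    public Dictionary<string, string> Database { get; } = new();

    // Writes accepted by the cache but not yet persisted (write-back only).
    private readonly Queue<KeyValuePair<string, string>> _pending = new();

    // Write-through: update the cache and the database as part of the same request.
    public void WriteThrough(string key, string value)
    {
        Cache[key] = value;
        Database[key] = value;
    }

    // Write-back: update the cache now and defer the database write.
    public void WriteBack(string key, string value)
    {
        Cache[key] = value;
        _pending.Enqueue(new KeyValuePair<string, string>(key, value));
    }

    // Stands in for the asynchronous job that persists deferred writes.
    public void Flush()
    {
        while (_pending.Count > 0)
        {
            var entry = _pending.Dequeue();
            Database[entry.Key] = entry.Value;
        }
    }

    // Write-around: write only to the database and drop any stale cached copy.
    public void WriteAround(string key, string value)
    {
        Database[key] = value;
        Cache.Remove(key);
    }

    // Read path: serve from the cache, or load from the database on a miss
    // and populate the cache for later reads.
    public bool TryRead(string key, [NotNullWhen(true)] out string? value)
    {
        if (Cache.TryGetValue(key, out value)) return true;
        if (!Database.TryGetValue(key, out value)) return false;
        Cache[key] = value;
        return true;
    }
}
```

The trade-off shows up in what each dictionary holds right after a write: write-through keeps both in sync, write-back leaves the database behind until `Flush` runs, and write-around leaves the cache empty until the first read.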

Distributing Cache Using Redis

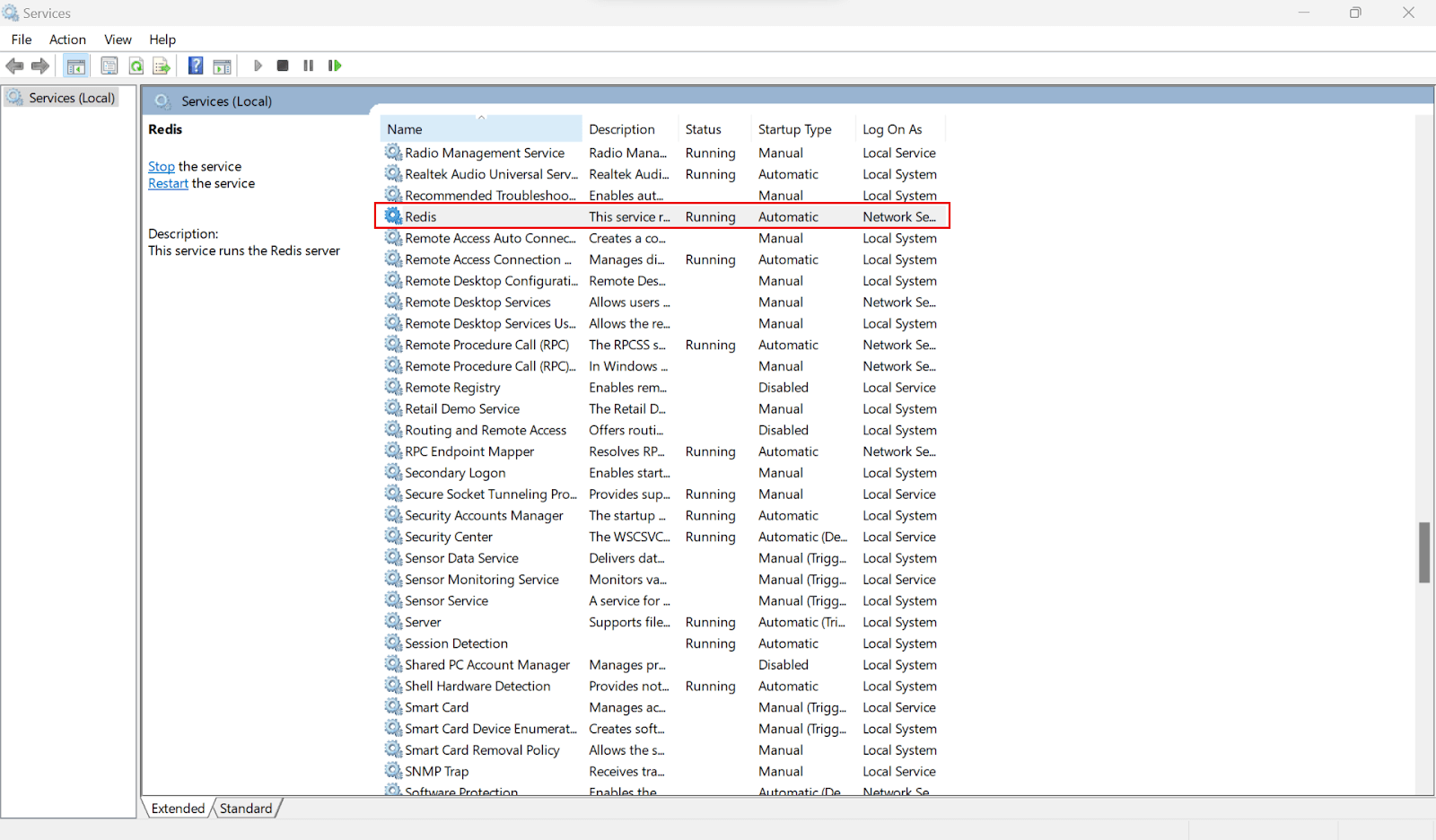

Install Redis:

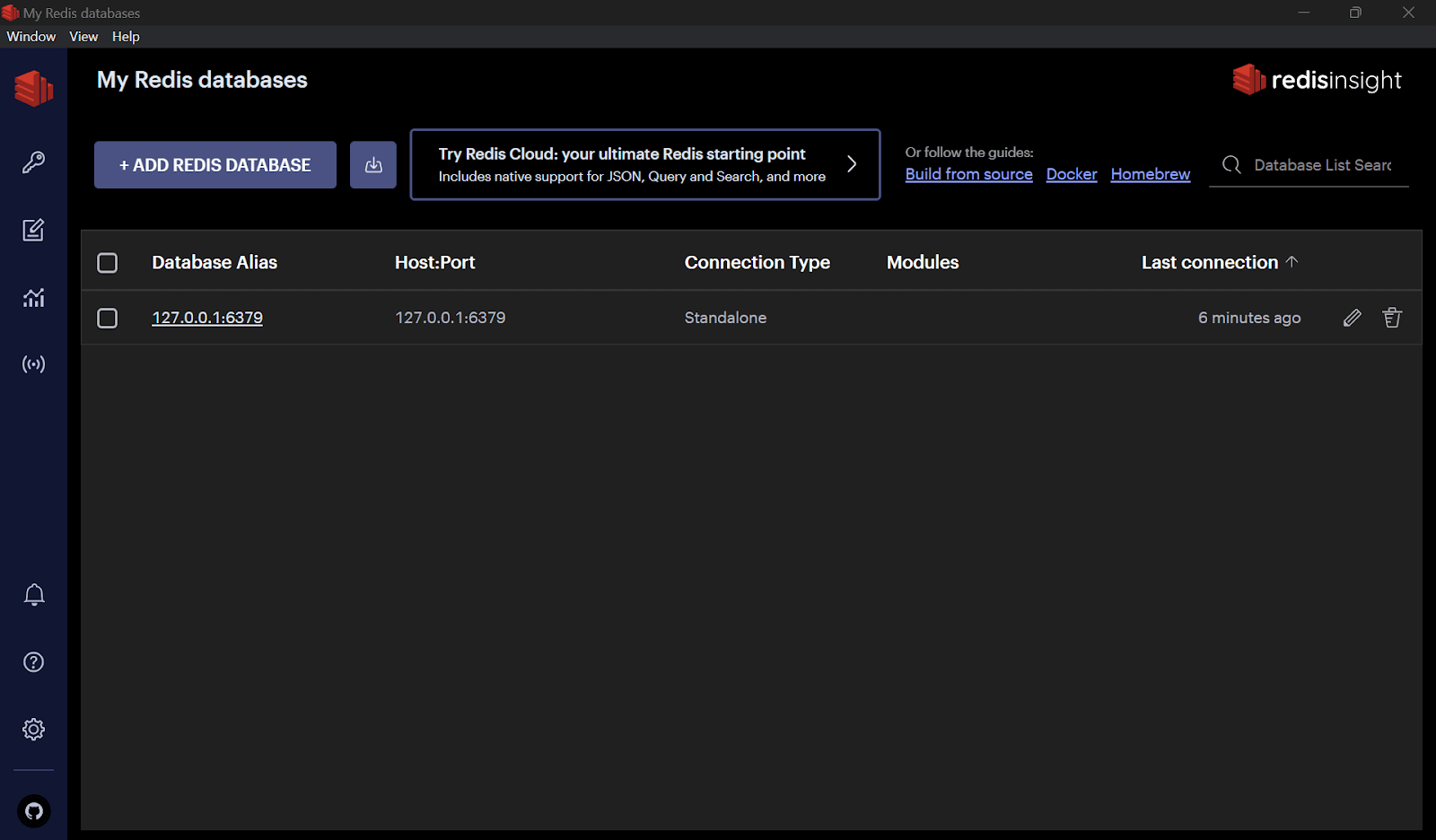

Run RedisInsight and Connect to the Redis server.

Redis is a popular choice for distributed caching due to several key reasons:

- High Performance: Redis is an in-memory data store, so it keeps data in memory rather than on disk. This enables extremely fast read and write operations, making Redis highly performant for caching purposes. It can handle a very large number of requests per second, which makes it suitable for high-throughput scenarios.

- Flexible Data Structures: Redis provides a wide set of data structures, such as strings, lists, sets, hashes, and more. These data structures allow you to model complex data and perform various operations on them efficiently. For caching, Redis offers key-value pairs, which are ideal for storing and retrieving data quickly.

- Distributed Architecture: Redis supports distributed caching by allowing us to create a cluster of Redis nodes that work together. This cluster architecture provides high availability and scalability. Data can be distributed across multiple nodes, ensuring that the caching system can handle larger datasets and handle failures gracefully.

- Persistence Options: Although Redis is primarily an in-memory cache, it also offers different persistence options. You can configure Redis to periodically save the data to disk or append the changes to a log file. This provides durability and allows Redis to recover data from disk in case of a restart or failure.

- Advanced Caching Features: Redis includes features specifically designed for caching purposes. It supports expiration times for keys, allowing you to set a time-to-live (TTL) for cached data. Redis can automatically remove expired keys, freeing up memory and ensuring freshness of cached data.

- Pub/Sub Messaging: Redis includes a publish/subscribe (pub/sub) messaging system. This can be useful in distributed architectures where changes to data need to be propagated across multiple nodes. For example, when data is updated, you can publish a message to notify other components to invalidate their cache.

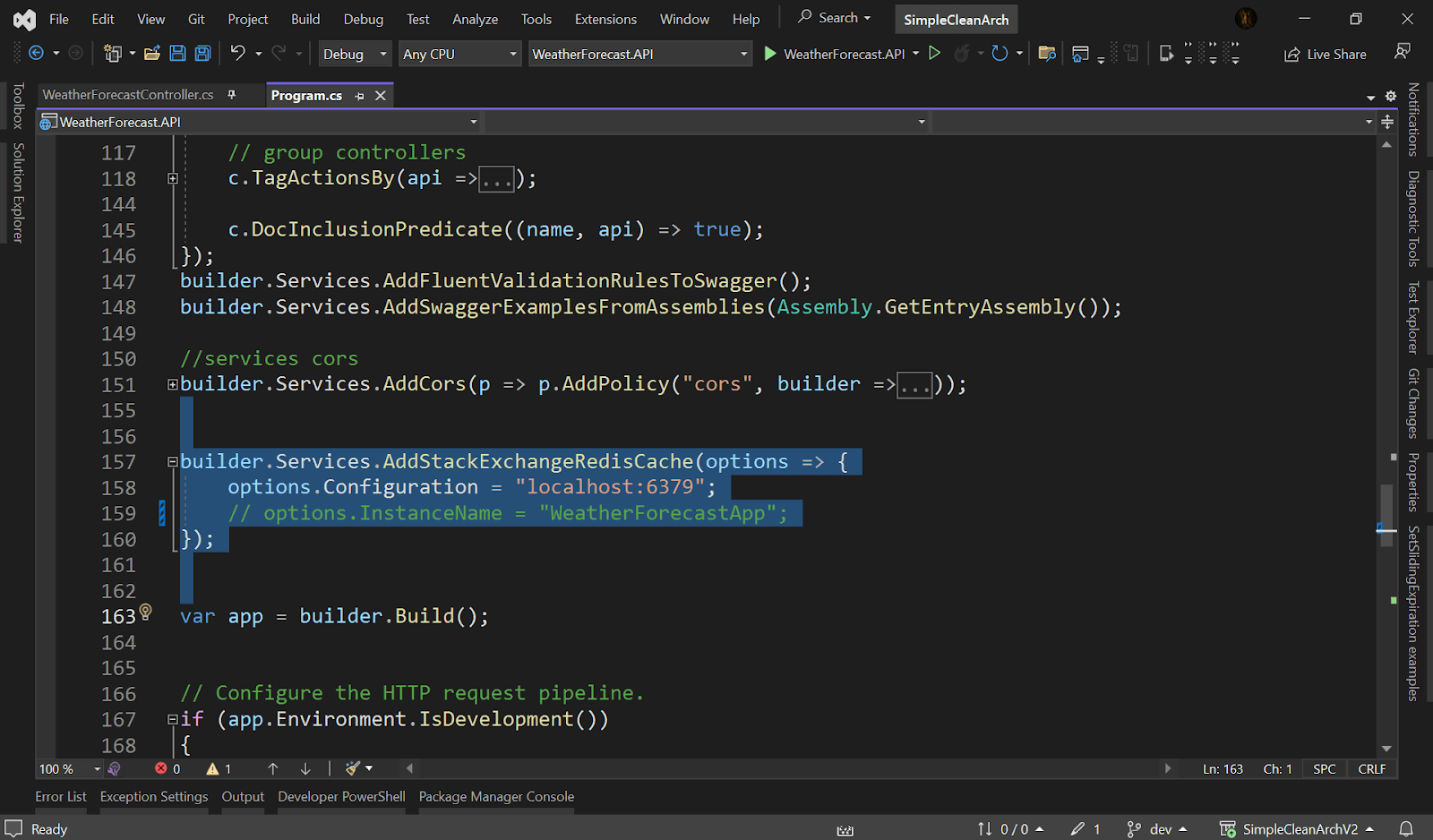

Configure the App with Redis:

The Redis cache server is ready, so now it is time to configure our app to work with it.

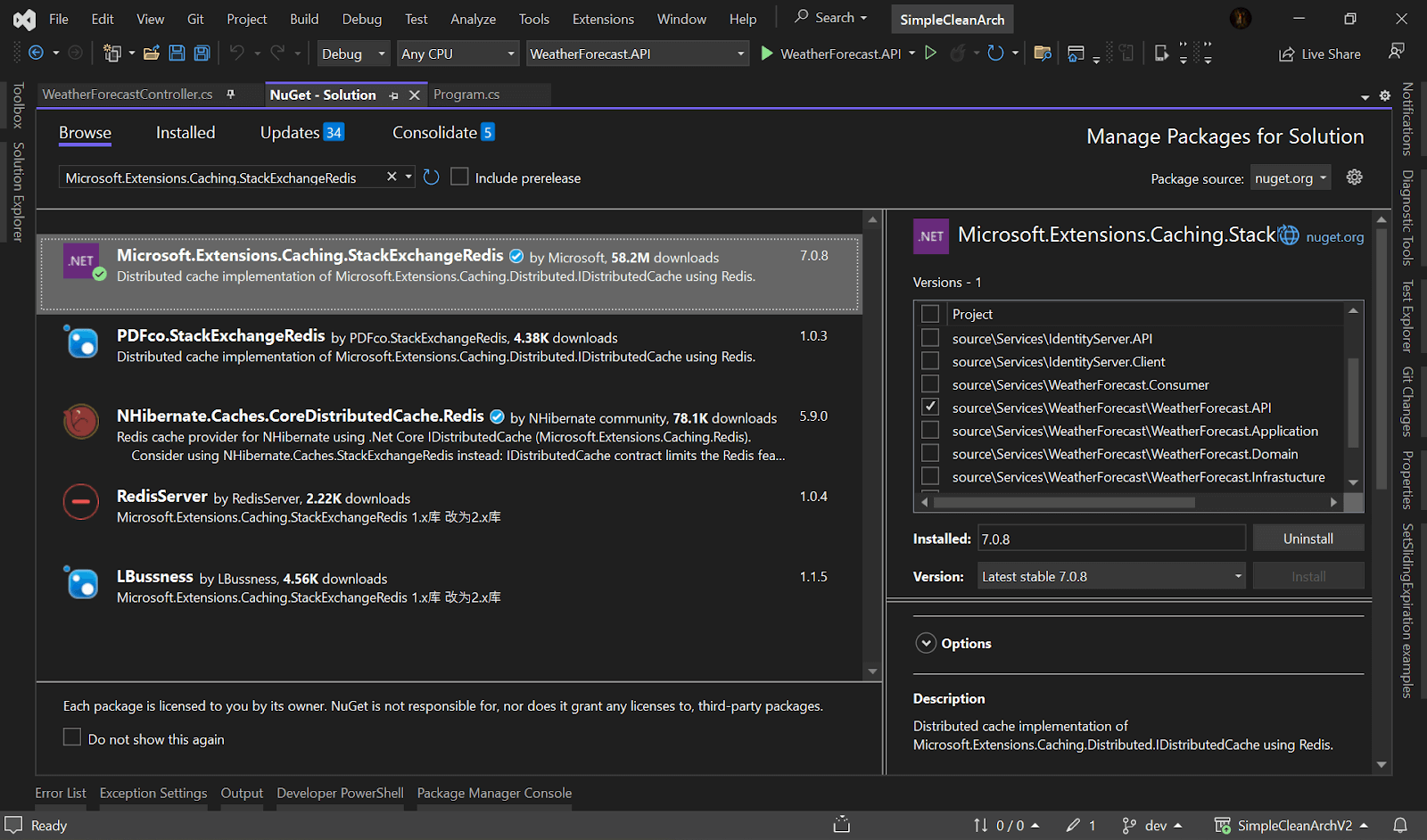

- Add the Microsoft.Extensions.Caching.StackExchangeRedis NuGet package to our project.

- Register the service

```csharp
builder.Services.AddStackExchangeRedisCache(options =>
{
    options.Configuration = "localhost:6379";
    // options.InstanceName = "WeatherForecastApp";
});
```

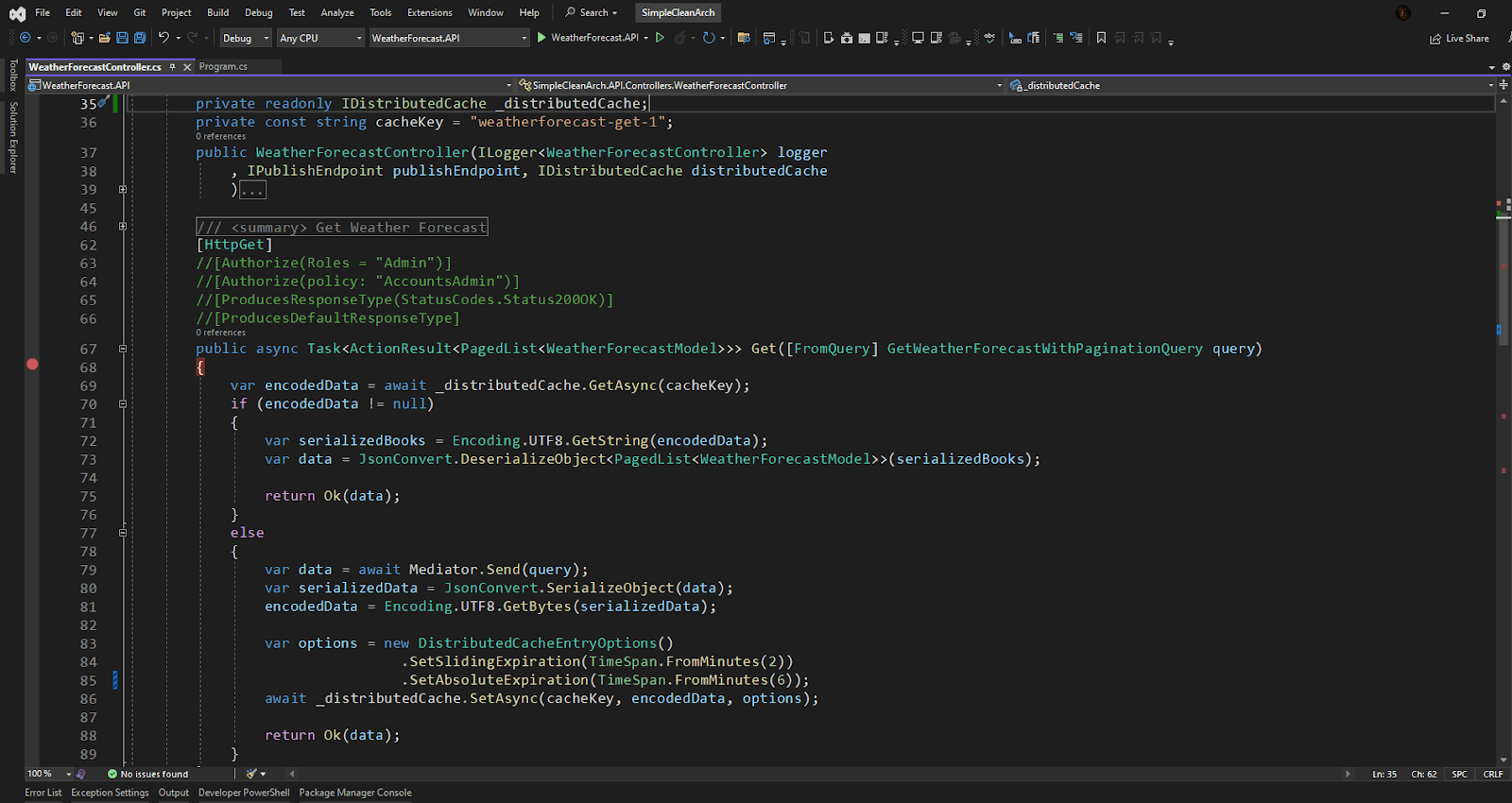

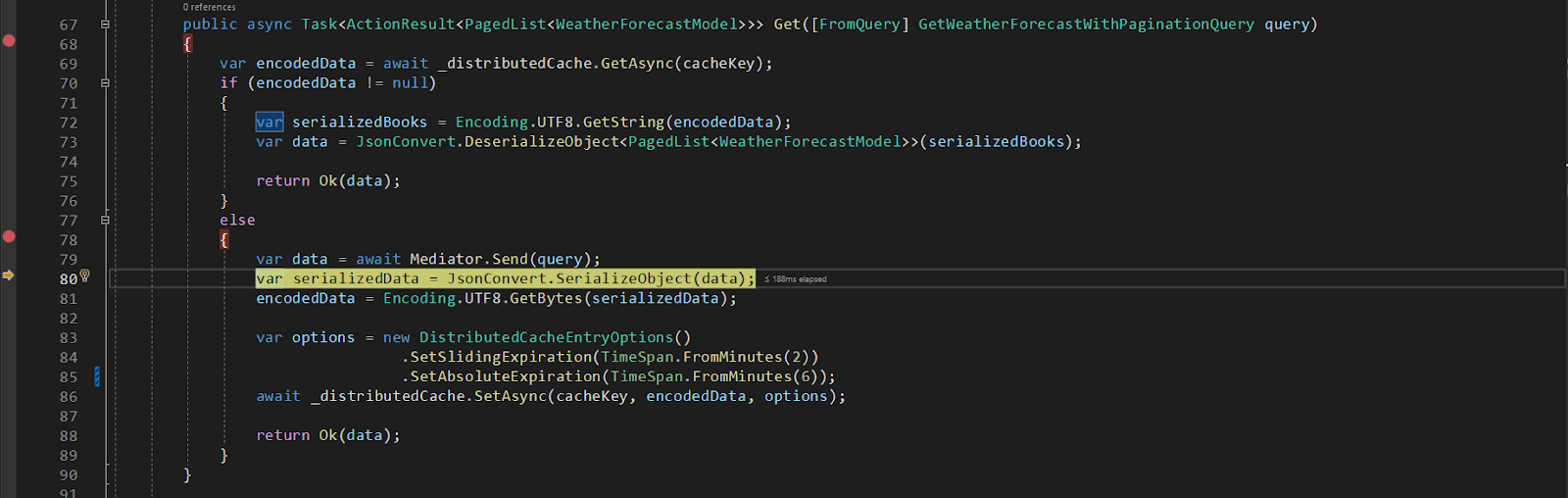

- We will follow the write-around cache policy in the Get action of WeatherForecastController. After receiving a request, the system checks whether the data is available in the cache. If it is, the response is returned from the cache; if not, the data is fetched from the database, the cache is updated, and then the data is returned. We will also apply a TTL policy to the cached entry.

```csharp
public async Task<ActionResult<PagedList<WeatherForecastModel>>> Get([FromQuery] GetWeatherForecastWithPaginationQuery query)
{
    // cacheKey is defined elsewhere in the controller (not shown here).
    var encodedData = await _distributedCache.GetAsync(cacheKey);

    if (encodedData != null)
    {
        // Cache hit: decode and deserialize the cached payload.
        var serializedData = Encoding.UTF8.GetString(encodedData);
        var cachedData = JsonConvert.DeserializeObject<PagedList<WeatherForecastModel>>(serializedData);
        return Ok(cachedData);
    }

    // Cache miss: load from the database, then populate the cache.
    var data = await Mediator.Send(query);
    encodedData = Encoding.UTF8.GetBytes(JsonConvert.SerializeObject(data));

    var options = new DistributedCacheEntryOptions()
        .SetSlidingExpiration(TimeSpan.FromMinutes(2))
        .SetAbsoluteExpiration(TimeSpan.FromMinutes(6));

    await _distributedCache.SetAsync(cacheKey, encodedData, options);
    return Ok(data);
}
```

SetSlidingExpiration: Cached items have their expiration time extended each time they are accessed or updated, allowing frequently used items to stay in the cache while less frequently used ones eventually expire and are removed.

SetAbsoluteExpiration: Cached items have a fixed expiration time, and they are removed from the cache as soon as that time duration has passed, regardless of how often they are accessed or updated.

In short, SetSlidingExpiration extends the expiration time of cached items with each access or update, while SetAbsoluteExpiration sets a fixed expiration time for items, regardless of their usage.
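When both options are set, as in the Get action, an entry is dropped at whichever limit is reached first. The sketch below models that rule with explicit timestamps. It is a simplified model for reasoning about the behaviour, not the library's implementation, and `ExpiringEntry` is a hypothetical type.

```csharp
using System;

// Hypothetical model of one cache entry with sliding and absolute expiration.
public sealed class ExpiringEntry
{
    private readonly TimeSpan _sliding;
    private readonly DateTimeOffset _absoluteDeadline;
    private DateTimeOffset _lastAccess;

    public ExpiringEntry(DateTimeOffset createdAt, TimeSpan sliding, TimeSpan absolute)
    {
        _sliding = sliding;
        _absoluteDeadline = createdAt + absolute; // fixed when the entry is created
        _lastAccess = createdAt;
    }

    // Expired once the absolute deadline has passed or the entry has been
    // idle for at least the sliding window, whichever happens first.
    public bool IsExpired(DateTimeOffset now) =>
        now >= _absoluteDeadline || now - _lastAccess >= _sliding;

    // A successful access restarts the sliding window but never moves
    // the absolute deadline.
    public bool TryAccess(DateTimeOffset now)
    {
        if (IsExpired(now)) return false;
        _lastAccess = now;
        return true;
    }
}
```

With a 2-minute sliding window and a 6-minute absolute limit, an entry that is read every 90 seconds stays alive until minute six and is then dropped even though it is still in use, while an entry that is never read is dropped after two minutes.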

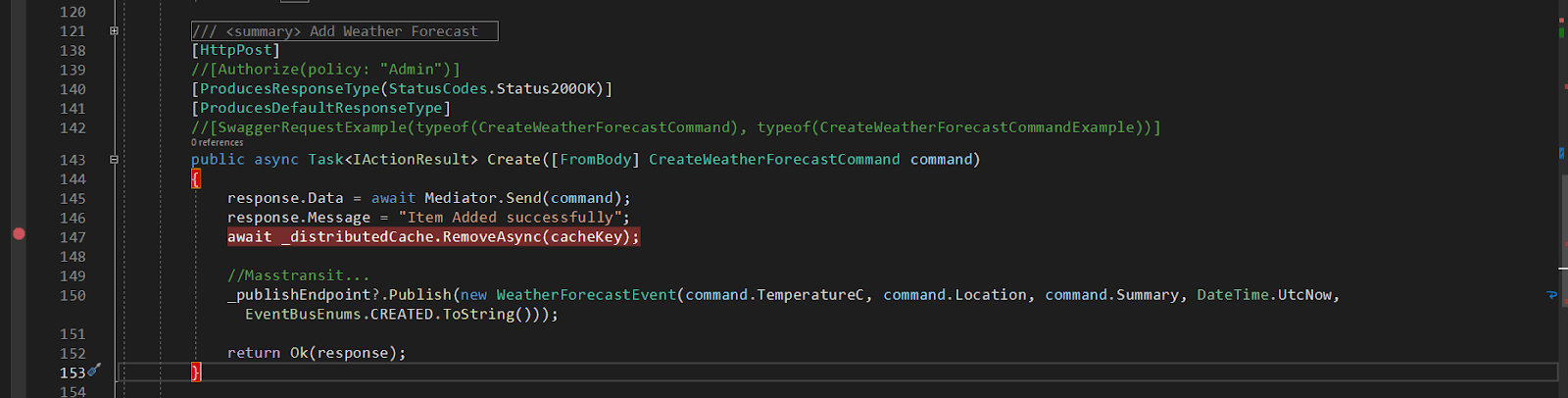

- After adding a new item, we remove the existing cache entry so that the next read fetches fresh data from the database.

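```csharp
public async Task<IActionResult> Create([FromBody] CreateWeatherForecastCommand command)
{
    // response and cacheKey are defined elsewhere in the controller (not shown here).
    response.Data = await Mediator.Send(command);
    response.Message = "Item Added successfully";

    // Invalidate the cached list so the next Get reloads it from the database.
    await _distributedCache.RemoveAsync(cacheKey);

    // MassTransit: publish the event and await it so failures are not silently dropped.
    if (_publishEndpoint != null)
    {
        await _publishEndpoint.Publish(new WeatherForecastEvent(command.TemperatureC, command.Location, command.Summary, DateTime.UtcNow, EventBusEnums.CREATED.ToString()));
    }

    return Ok(response);
}
```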

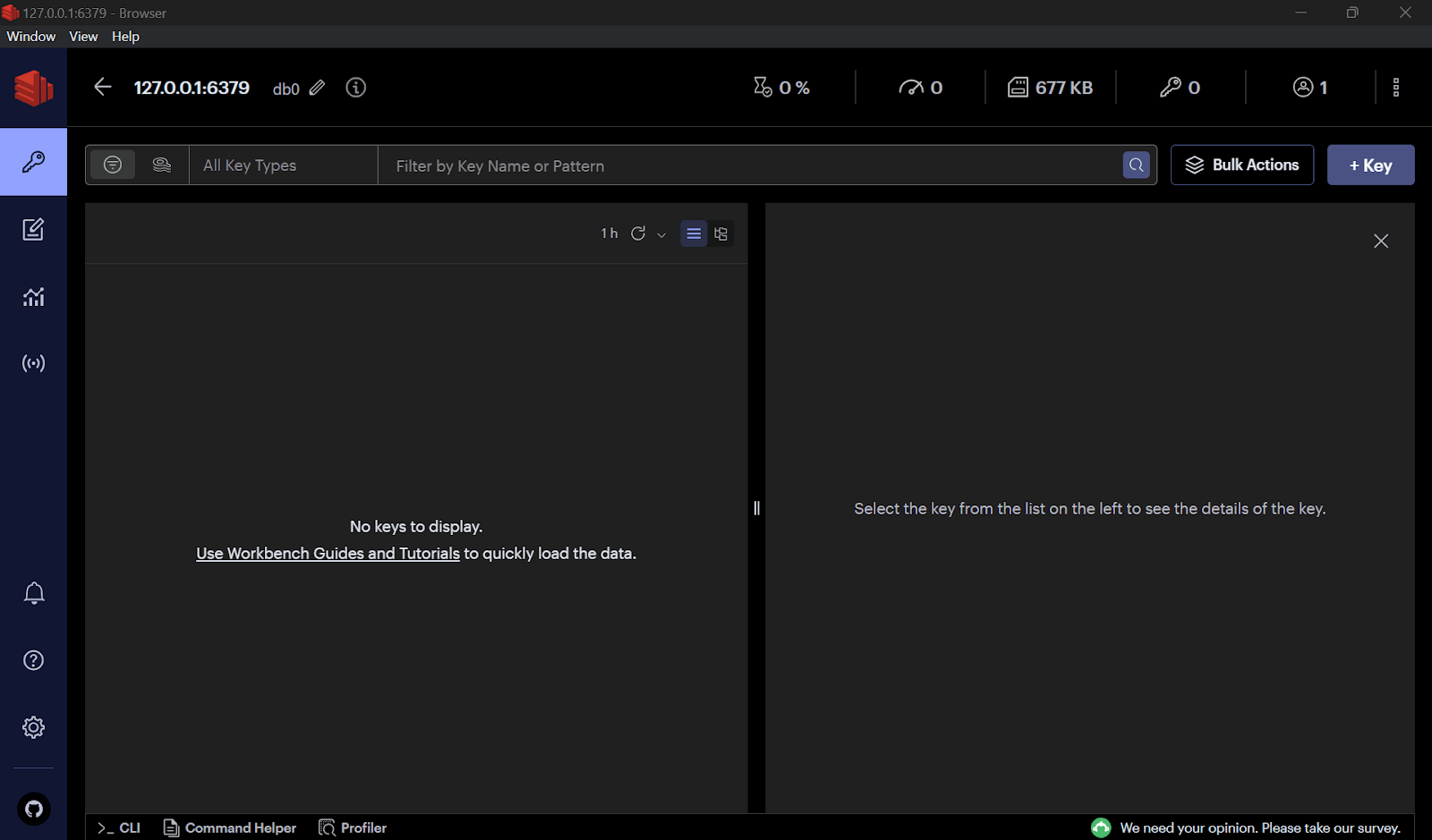

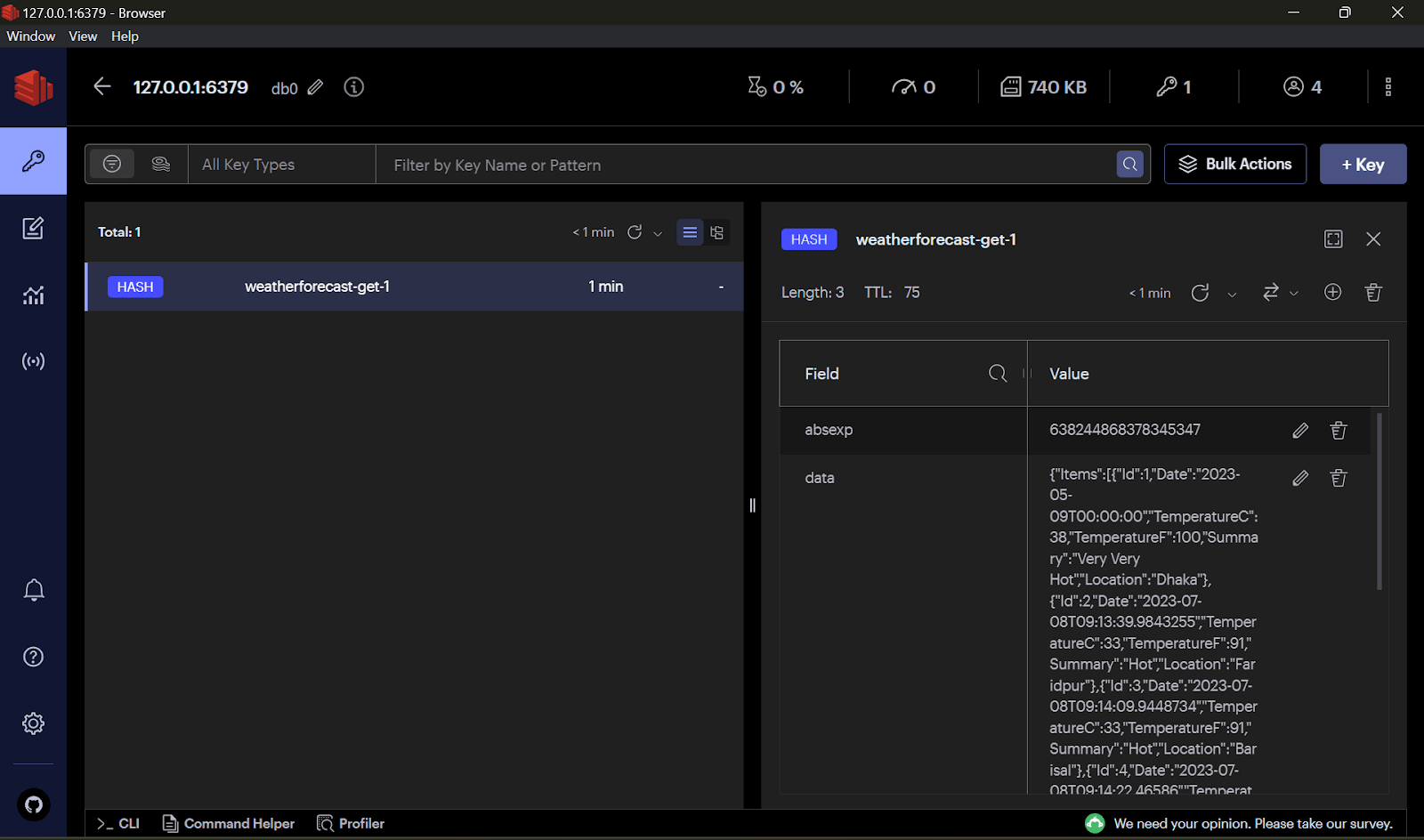

- Now run the app and call the Get action. First, check that the cache is empty.

Because the cache is empty, the system gets the data from the database and then updates the cache.

Now check the cache again: the data is available in the cache.

If the system does not receive any request within the next two minutes, the sliding expiration removes this cache entry automatically. After six minutes, the absolute expiration removes it even if it is still being accessed.

I hope this helps us understand the purpose of caching and its implementation strategy. For further analysis and review, the source is here. Thanks for reading.